What threats really count

It's not about cyber tech, stupid. It's about your business leadership, man.

Photo by Anna Tarazevich

What risks really count for your business?

No question is more important for implementing an effective program of cybersecurity for your business. The management board, IT and security practitioners cannot expect to mitigate risk effectively without knowing the sources and cost of threats to the organization.

A modern organization depends on its information processing systems in order to conduct business.

The prevailing security model prescribes defense in depth for these systems. The most common strategies are to mitigate risk with network, cloud and application security products.

These products are reactive countermeasures: they block network ports and services, detect known application exploits, or block entry of malicious code to the network.

Are your security products likely to be effective in the long-term?

Can attacks on your business be neutralized with defensive means only?

In other words, is there a “black-box” security solution for the business?

The answer is clearly no.

A reactive network defense tool such as a firewall cannot protect against exploitation of software defects, and an application firewall is no replacement for in-depth understanding of company-specific systems or system configuration vulnerabilities.

In this article, I introduce a methodology called Business Threat Modeling.

Business Threat Modeling is a continuous threat assessment process for software development organisations that employs a systematic risk analysis of complex software systems along with quantitative evaluation of how well removing software defects reduces risk.

Business Threat Modeling is based on four basic tenets that are discussed at greater length in this article. The four tenets are:

Security assessment of complex software systems

Quantitative evaluation and financial justification for security countermeasures

Explicit communications between developers and security

Sustain continuous risk reduction

THE PROBLEM: DEFECTIVE SYSTEMS ARE INSECURE SYSTEMS

This seemingly obvious observation is graphically borne out in two large-scale examples of data breaches: WannaCry and Target.

WannaCry ransomware - the NSA was keeping it a secret

A single vulnerability can be exploited in large numbers if left unpatched in widely-used software.

The WannaCry ransomware outbreak in 2017 was largely due to an unpatched vulnerability in Microsoft Windows, affecting hundreds of thousands of computers globally.

The Windows vulnerability that WannaCry exploited was initially discovered by the U.S. National Security Agency (NSA), which developed an exploit for it codenamed “EternalBlue”.

The timeline after the NSA discovery shows an interesting chain of events.

The U.S. National Security Agency (NSA) did not publicly disclose the "EternalBlue" vulnerability. Instead, it was leaked by a hacking group called "The Shadow Brokers."

In April 2017, The Shadow Brokers released a set of hacking tools, including the EternalBlue exploit, that they claimed were stolen from the NSA. Microsoft had already released a patch (MS17-010) for the vulnerability on March 14, 2017, about a month before the leak and about two months before the WannaCry ransomware attack began on May 12, 2017.

Given the timeline, Microsoft's patch was available before the WannaCry attack started, but many systems remained unpatched and were thus vulnerable to the ransomware.

It's not clear how Microsoft became aware of the vulnerability before the Shadow Brokers leak, but some have speculated that the NSA informed Microsoft about it once they realized there was a risk of the exploit being leaked or discovered.

Target - stealing 40M payment cards via the air-conditioning systems

The Target data breach that occurred in 2013 compromised the payment card data of 40 million Target customers. The attack was a result of a complex series of events:

Initial Entry through a Third Party: The attackers initially gained access to Target's network by stealing credentials from a third-party HVAC vendor, Fazio Mechanical. It is believed that the credentials were stolen through a spear-phishing attack on Fazio Mechanical, making phishing a distant origin of the breach but not the direct cause of Target's breach.

Movement within the Network: After gaining access to Target's network using the HVAC vendor's credentials, the attackers were able to move laterally within the network, eventually accessing the point-of-sale (POS) system.

Malware Installation: The attackers then installed malware on the POS systems to scrape and transmit credit card data before it was encrypted and sent to the payment processor.

Exfiltration: The stolen data was periodically collected by the attackers and sent to external servers.

So, while phishing played a role in the initial stages by compromising a third-party vendor, it was not the direct method used to breach Target's systems.

The breach underscored the importance of taking a systems approach to protecting transaction processing systems, including securing third-party vendor access and ensuring robust network segmentation (i.e. making it impossible for attackers in the HVAC network to get onto the store network).

Software vulnerabilities - this has been going on for over 25 years

The Carnegie Mellon Software Engineering Institute (SEI) reports that 90 percent of all software vulnerabilities are due to well-known defect types (for example using a hard coded server password or writing temporary work files with world read privileges). All of the SANS Top 20 Internet Security vulnerabilities are the result of “poor coding, testing and sloppy software engineering”. See the latest SANS Critical Information Security 2023 controls here.

Why don't organizations do more to improve their production systems quality?

Let’s examine commitment to quality at three levels in an organization: end-users, development managers and senior executives.

Users are conditioned to accept unreliable software on their desktop.

Development managers are inclined to accept faulty software as a tradeoff to meeting a development schedule.

Senior executives, while committed to quality of their own products and services, do not find security breaches sufficient reason to become security leaders with their enterprise systems because:

They usually receive conflicting proposals for new information security initiatives with weak or missing financial justifications.

The recommended security initiatives often disrupt the business.

The need to understand operational risk

Network and application security products are reactive means used to defend the organization rather than proactive means of understanding and reducing operational risk.

Today’s defense in depth strategy is to deploy multiple tools at the network perimeter such as firewalls, intrusion prevention and malicious content filtering.

The defense-focus is primarily on outside-in attacks, despite the fact that the majority of attacks on customer data and intellectual property are inside out. The notion of trusted systems inside a hard perimeter has practically disappeared with the proliferation of Web services, SSL VPN and convergence of application transport to HTTP.

A reactive network defense tool such as a firewall cannot protect against exploitation of software defects, and cloud application security that relies on databases of vulnerabilities is no replacement for in-depth understanding of specific source code or system configuration vulnerabilities.

In the past few years, the defensive focus has shifted quickly to protecting data and software running in the cloud. And yes, even the multi-billion dollar cloud security market, dominated by Israeli cybersecurity vendors like Wiz, still takes a reactive approach to protecting the business.

THE OBJECTIVE: COST EFFECTIVE SYSTEM DEFECT REDUCTION

It is rare to see systematic defect reduction projects for production software running in the enterprise. Apparently, if it were easy, everyone would be doing it. So what makes it so hard?

Current development methodologies (including Agile) used by internal development teams are a bad fit for threat analysis of production software systems.

The cost of finding and fixing a bug in a production system is regarded as too high.

The application developers and IT security teams don’t usually talk to each other. The larger the organization, the more they lose when information gets lost in the cracks.

We can meet these challenges in a cost-effective way by establishing three tenets:

Use a risk analysis process that is suitable for production software systems. Collect data from all levels in the organization that touch the production system and classify defects for risk mitigation according to standard vulnerability and problem types.

Provide executives with financial justification for defect reduction. Quantify the risk in terms of assets, software vulnerabilities, and the organization’s current threats.

Require the development and IT security teams to start talking. Explicit communications between software developers and IT security can be facilitated by an online knowledge base and ticketing tool that provide an updated picture of well-known defects and security events.

SECURITY ASSESSMENT OF COMPLEX SYSTEMS (TENET #1)

Overview

The Business Threat Modeling process identifies, classifies and evaluates software vulnerabilities in order to recommend cost-effective countermeasures. The process is iterative and its steps can run independently, enabling any step to feed changes into previous steps even after partial results have been attained.

Continuous review of findings is key to the success of the project. For example, an end-user may point out fatal flaws in an order entry form to the VP engineering during the Validate Findings step and influence the results in the Classify Vulnerabilities and Build the threat model steps.

1. Set scope.

The first step is to determine the scope of work in terms of business units and assets. Focusing on a particular business unit and its application functions will improve the ability to converge quickly. The process will also benefit from an executive-level sponsor, who will need to buy into implementation of the risk mitigation plan.

The team members are chosen at a preliminary planning meeting with the lead analyst and the project’s sponsor. There will be 4-8 active participants with relevant knowledge of the business and the software. The team is guided by expert risk analysts who have good people skills and the patience to work in a chaotic process.

The output of Set Scope is one page:

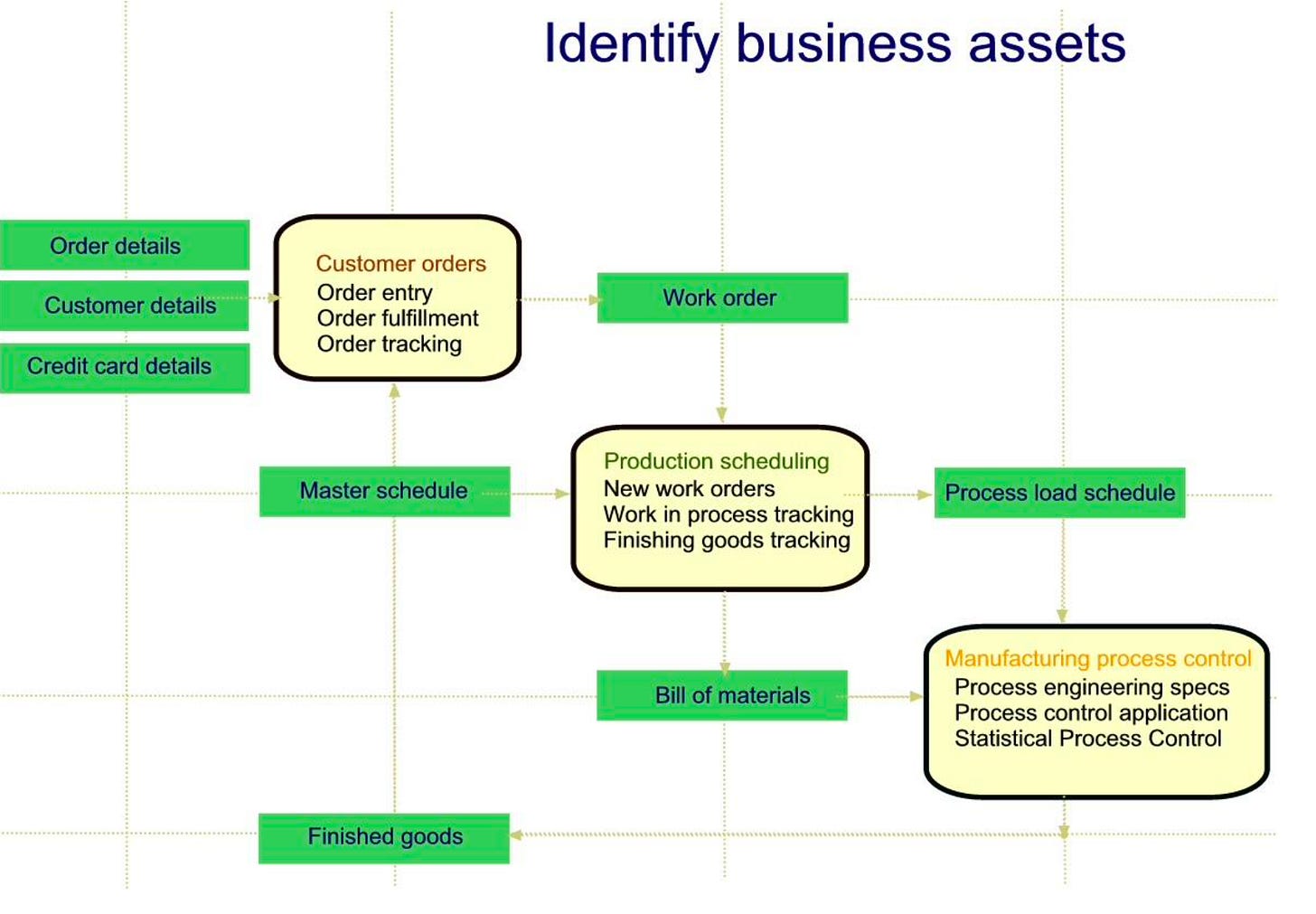

2. Identify business assets

In step 2, the team identifies operational business functions and their key assets:

This part of the process can be done using wall-charts as shown in the below figure. The graphic format helps the team visualize the scope of assets and estimate potential impact of threats on assets.

Business functions (white boxes) are placed on a diagonal from top left to bottom right as shown in the below figure. Assets (colored in green) flow clockwise around the diagonal of business functions.

3. Identify application software components

After identifying business functions in Identify business assets, the team now identifies application software components (but doesn’t assess vulnerabilities) using two sub-steps:

a. Identify application functions that serve the business function

b. Decompose application functions to software components

In order to help build a consistent, reasonably high-level view of the system, this part of the process can be done using wall-charts as shown in the below figure.

Application functions (white boxes) are placed on a diagonal from top left to bottom right as shown in the below figure. Decomposed components (colored in tan) flow clockwise around the diagonal of application functions.
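For teams that want to carry the wall chart into a tool, the decomposition can be recorded as a simple nested mapping. The following is only a sketch: the business functions, application functions and component names are hypothetical examples, not taken from a real project.

```python
# Hypothetical example data:
# business function -> application functions -> software components.
decomposition = {
    "Order entry": {
        "Web order form": ["order_form.html", "validate_order.js"],
        "Order confirmation": ["send_confirmation.py", "smtp_relay"],
    },
    "Billing": {
        "Invoice generation": ["invoice_batch.py", "customer_table"],
    },
}


def list_components(chart):
    """Flatten the chart into (business function, application function, component) rows."""
    rows = []
    for business_fn, app_fns in chart.items():
        for app_fn, components in app_fns.items():
            for component in components:
                rows.append((business_fn, app_fn, component))
    return rows


for row in list_components(decomposition):
    print(" > ".join(row))
```

The flat list of rows is a convenient input for the next step, where each component is classified.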

4. Classify the software vulnerabilities.

NIST, the U.S. National Institute of Standards and Technology, provides open access to vulnerability scores based on CVSS, the Common Vulnerability Scoring System, an open system for rating the severity of software vulnerabilities.

In our methodology, CVSS scores are computed for each component identified in the Identify software components step. In addition to the CVSS score, we collect an additional field, the problem type category, for example ”Use of hard-coded password”.

The knowledge base supporting the process contains a baseline of classified software vulnerabilities and evolves over time as the team classifies new vulnerabilities. Various source code scanners may also be used in this step, for example – FindBugs to find problems in Java source code.
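A knowledge base entry of this kind might look like the sketch below. The record layout, component names and scores are assumptions made for illustration; only the severity bands follow the published CVSS v3.x qualitative rating scale.

```python
from dataclasses import dataclass


@dataclass
class VulnerabilityRecord:
    component: str     # software component identified in step 3
    cvss_score: float  # CVSS base score, 0.0 to 10.0
    problem_type: str  # problem type category

    def severity(self) -> str:
        """Map the score to the CVSS v3.x qualitative severity rating."""
        if not 0.0 <= self.cvss_score <= 10.0:
            raise ValueError("CVSS scores range from 0.0 to 10.0")
        if self.cvss_score == 0.0:
            return "None"
        if self.cvss_score < 4.0:
            return "Low"
        if self.cvss_score < 7.0:
            return "Medium"
        if self.cvss_score < 9.0:
            return "High"
        return "Critical"


# Hypothetical entries; the scores are made up for illustration.
knowledge_base = [
    VulnerabilityRecord("login_service", 9.1, "Use of hard-coded password"),
    VulnerabilityRecord("report_export", 5.3, "Temporary file with world-read privileges"),
    VulnerabilityRecord("order_confirmation_script", 2.4, "Information exposure in email"),
]

for record in sorted(knowledge_base, key=lambda r: r.cvss_score, reverse=True):
    print(f"{record.component}: {record.cvss_score} ({record.severity()}) - {record.problem_type}")
```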

5. Build the threat model

The team now builds a threat model.

The threat model is a database of assets, threats, vulnerabilities and countermeasures.

Maintaining the threat model in a database provides the analytical capability to calculate risk and recommend the most cost-effective countermeasures based upon asset value, threat probability of occurrence, exploited vulnerabilities and percent damage to the assets during an attack.

Assets collected in the Identify business assets step are assigned a financial value. Threats are named and classified as to their probability of occurrence and damage levels. Vulnerabilities that were collected in the Classify the vulnerabilities step are associated with threats.
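To make the calculation concrete, here is a minimal sketch of a threat model in code. It assumes the simplest possible risk formula, expected loss = probability of occurrence x asset value x percent damage, summed over the assets a threat touches. The class names, the yearly probability unit and all figures are hypothetical, and a real threat model database would hold far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    name: str
    value: float  # financial value assigned by the team


@dataclass
class Threat:
    name: str
    probability: float  # assumed unit: expected occurrences per year
    damage: dict        # asset name -> fraction of the asset value lost (0.0 to 1.0)
    vulnerabilities: list = field(default_factory=list)  # exploited vulnerabilities


def threat_risk(threat, assets):
    """Expected yearly loss: probability * sum(asset value * damage fraction)."""
    value_of = {asset.name: asset.value for asset in assets}
    loss_per_event = sum(value_of[name] * fraction
                         for name, fraction in threat.damage.items())
    return threat.probability * loss_per_event


# Hypothetical figures for illustration only.
assets = [Asset("Customer database", 1_000_000), Asset("Order entry system", 200_000)]
threats = [
    Threat("Stolen vendor credentials", 0.25,
           {"Customer database": 0.5, "Order entry system": 0.25},
           ["Use of hard-coded password"]),
]

for threat in threats:
    print(f"{threat.name}: expected yearly loss {threat_risk(threat, assets):,.0f}")
```

With these numbers a single event costs 550,000 and, at one expected occurrence every four years, the yearly risk comes to 137,500.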

6. Build the risk-mitigation plan

In step 6, the team specifies countermeasures for vulnerabilities found in the software components and records them in the threat model database. While the best countermeasure for a problem is fixing it, in reality there may not be documentation and the programmers who wrote the code are probably in some other job. This means that other means may be required, such as code wrappers or application proxies.

The possible types of countermeasures are Retain, Modify and Add as seen in the below figure:

Retain the existing component (leave the defects in place) or,

Modify the component (fix the defect or put in a workaround) or,

Add components (for example call an identity provider service to authenticate online users instead of using a proprietary customer table).

Each countermeasure is assigned a cost and mitigation level. The cost may be a combination of fixed and variable cost in order to describe a one-time cost of fixing a problem and ongoing maintenance cost.
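A countermeasure record along these lines could be sketched as follows. The field names, the planning horizon in years and the cost figures are assumptions for illustration, not part of the methodology itself.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    RETAIN = "Retain"
    MODIFY = "Modify"
    ADD = "Add"


@dataclass
class Countermeasure:
    name: str
    action: Action
    fixed_cost: float   # one-time cost of putting the countermeasure in place
    yearly_cost: float  # ongoing maintenance cost per year
    mitigation: float   # fraction of the risk removed, 0.0 to 1.0

    def total_cost(self, years: int) -> float:
        """Fixed cost plus maintenance over an assumed planning horizon."""
        return self.fixed_cost + self.yearly_cost * years


# Hypothetical examples, one per countermeasure type.
countermeasures = [
    Countermeasure("Leave legacy report script as is", Action.RETAIN, 0, 0, 0.0),
    Countermeasure("Remove hard-coded password", Action.MODIFY, 8_000, 500, 0.75),
    Countermeasure("Call identity provider service", Action.ADD, 20_000, 6_000, 0.9),
]

for cm in countermeasures:
    print(f"{cm.action.value}: {cm.name}, 3-year cost {cm.total_cost(3):,.0f}")
```

Note that Retain is a legitimate choice with zero cost and zero mitigation, which keeps the decision to accept a defect visible in the model.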

7. Validate findings

This extremely important step validates the current findings with expert/relevant players in the enterprise.

The objective is to use all means at the disposal of the team to qualify components and vulnerabilities as to where (they are in the system), which (assets are involved), what (they do now and in the past), why (they perform the way they do) and when (a component is initialized and activated).

Conceptually, no limits are placed on what questions can be asked. Users may downgrade low-risk software components and escalate others for priority attention. They may add or remove assets from the model and argue parameters such as probability, asset value, estimated damage etc.

For example, a server-side order confirmation script that sends email to the customer may have received a low CVSS score in Classify the software vulnerabilities. The team can simply decide to eliminate that vulnerability from the list during Validate findings.

QUANTITATIVE EVALUATION AND FINANCIAL JUSTIFICATION (TENET #2)

The output of the process provides executives with financial justification for an effective risk mitigation plan.

After specifying security countermeasures, the PTA risk-reduction optimization algorithm produces a prioritized list of the countermeasures in financial terms. The risk-reduction algorithm combines the most cost-effective countermeasures to reduce the overall system risk level to a minimum at a total fixed and variable cost.
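The PTA algorithm itself is not reproduced here. As a rough stand-in, the sketch below ranks countermeasures by risk reduction per unit of cost, which is one simple way to produce a prioritized list in financial terms. The input tuples and all figures are hypothetical, and the ranking ignores overlap between countermeasures that address the same risk.

```python
def prioritize(countermeasures):
    """Rank countermeasures by risk reduction per unit of cost, best first.

    Each input item is (name, risk addressed, mitigation fraction, total cost).
    """
    ranked = []
    for name, risk, mitigation, cost in countermeasures:
        reduction = risk * mitigation
        # A free countermeasure always ranks first.
        ratio = reduction / cost if cost > 0 else float("inf")
        ranked.append((name, reduction, cost, ratio))
    return sorted(ranked, key=lambda row: row[3], reverse=True)


# Hypothetical figures for illustration only.
candidates = [
    ("Add identity provider service", 100_000, 0.5, 40_000),
    ("Remove hard-coded password", 100_000, 0.75, 10_000),
    ("Segment vendor network", 200_000, 0.5, 25_000),
]

for name, reduction, cost, ratio in prioritize(candidates):
    print(f"{name}: reduces risk by {reduction:,.0f} for {cost:,.0f} (ratio {ratio:.2f})")
```

A list like this gives executives a single column to read: how much risk each unit of spending removes.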

EXPLICIT COMMUNICATIONS BETWEEN DEVELOPERS AND SECURITY (TENET #3)

The first order of business is having people talk to each other and argue the issues. By publishing CVSS scores and countermeasure costs, the developer and security teams can be confident that they can respond to a particular type of event in a consistent fashion.

We have found in our practice with clients that online collaboration tools (such as Slack or a wiki) can help give the company a clear, updated picture of well-known defects and security events. There is an abundance of such tools, and a discussion of them is outside the scope of this article.

What are the requirements?

An online collaboration tool should support continuous enterprise risk analysis and management. The tool should enable building a knowledge base and tracking open issues.

The knowledge base contains standard CVSS scores for components and CLASP problem type classifications, and is always available to the entire organization. Users can add new entities and modify scores as the business environment changes.

The issue tracker provides:

A consistent thread of requests, changes and open action items during the risk analysis process and in particular in the Validate findings step

Updated implementation status of countermeasures.

Unlike email, issues cannot get lost or be ignored!

SUSTAINING CONTINUOUS RISK REDUCTION

Training a team that can sustain quality

While the Business Threat Modeling process itself has educational benefit, there is no question that the quantity and complexity of production systems in a large organization requires skills for continuous risk analysis and defect reduction.

To meet this objective, we offer two courses. The first, “Business Threat Modeling for the enterprise”, is a two-day course that trains analysts and qualifies them to run a defect reduction process as described in this paper. An advanced course will qualify analysts who have conducted three risk analysis projects to teach the “Business Threat Modeling for the enterprise” course and coach others.

Improving best practices in the software development life cycle

The risk analysts supporting the process, together with the knowledge base are a significant resource for any organization that wants to evaluate the economic feasibility of a defect reduction program and improve best practices in the software development life cycle:

How to reduce avoidable rework

How to reliably identify fault-prone modules in a company's particular operation

How to identify modules with the most impact on system reliability and downtime

Develop sustaining metrics for defect reduction

Train application programmers in best security practices and help them see themselves as part of an integrated company-wide commitment to quality software.

Help the organization choose and implement disciplined practices such as Watts Humphrey's PSP (Personal Software Process) and TSP (Team Software Process) that can have high ROI in defect reduction in new software development.

A little bit of fear is a good thing

A CISO (Chief Information Security Officer) has a team and a budget to protect the business.

But - at the end of the day, the CEO cannot delegate fire and forget orders to the CISO. The CEO herself and her entire executive team must be committed to understanding what threats count and how best to mitigate them in an effective manner.

In today’s complex threat environment - we need a little bit of fear.

A little bit of fear will go a long way toward staying alert and keeping the organization alert and protected against cyber attacks like WannaCry and Target.